Summer 2019

Published: 2019-07-08 (last updated: 2024-10-11)

Working at robur

As announced previously, I started to work at robur in early 2018. We're a collective of five people, distributed around Europe and the US, with the goal of deploying MirageOS unikernels. We do this by developing bespoke MirageOS unikernels which provide useful services, and by deploying them for ourselves. We also develop new libraries and enhance existing libraries and other components of MirageOS. Example unikernels include our website which uses Canopy, a CalDAV server that stores entries in a git remote, and DNS servers (the latter two are further described below).

Robur is part of the non-profit company Center for the Cultivation of Technology, which manages the legal and administrative sides for us. We are responsible for acquiring funding ourselves, to pay ourselves reasonable salaries. We received funding for CalDAV from prototypefund and further funding from Tarides, and funding for TLS 1.3 from OCaml Labs; we also security-audited an OCaml codebase, and received donations, some of them in the form of Bitcoins. We're looking for further funded collaborations and also contracting: mail us at team@robur.io. Please donate (tax-deductible in EU), so we can accomplish our goal of putting robust and sustainable MirageOS unikernels into production, replacing insecure legacy systems that emit tons of CO2.

Deploying MirageOS unikernels

While several examples have been running for years (the MirageOS website, Bitcoin Piñata, TLS demo server, etc.), and some shell scripts for cloud providers are floating around, deployment is not (yet) streamlined.

Service deployment is complex: you have to consider its configuration, exfiltration of logs and metrics, provisioning with valid key material (TLS certificate, HMAC shared secret) and authenticators (CA certificate, ssh key fingerprint). Instead of requiring millions of lines of code for orchestration (such as Kubernetes), creating the images (docker), or provisioning (ansible), why not minimise the required configuration and dependencies?

Earlier in this blog I introduced Albatross, which serves in an enhanced version as our deployment platform on a physical machine (running 15 unikernels at the moment). I won't discuss it in more detail in this article.

CalDAV

In 2018, Steffi and I developed a CalDAV server. Since November 2018 we have had a test installation for robur, initially running as a Unix process on a virtual machine and persisting data to files on the disk. In mid-June 2019 we migrated it to a MirageOS unikernel: thanks to great efforts in git and irmin, unikernels can push to a remote git repository. We extended the ssh library with an ssh client and use this in git. This also means our CalDAV server is completely immutable (it does not carry state across reboots, apart from the data in the remote repository) and does not have persistent state in the form of a block device. Its configuration is mainly done at compile time by the selection of libraries (syslog, monitoring, ...), and by boot arguments passed to the unikernel at startup.

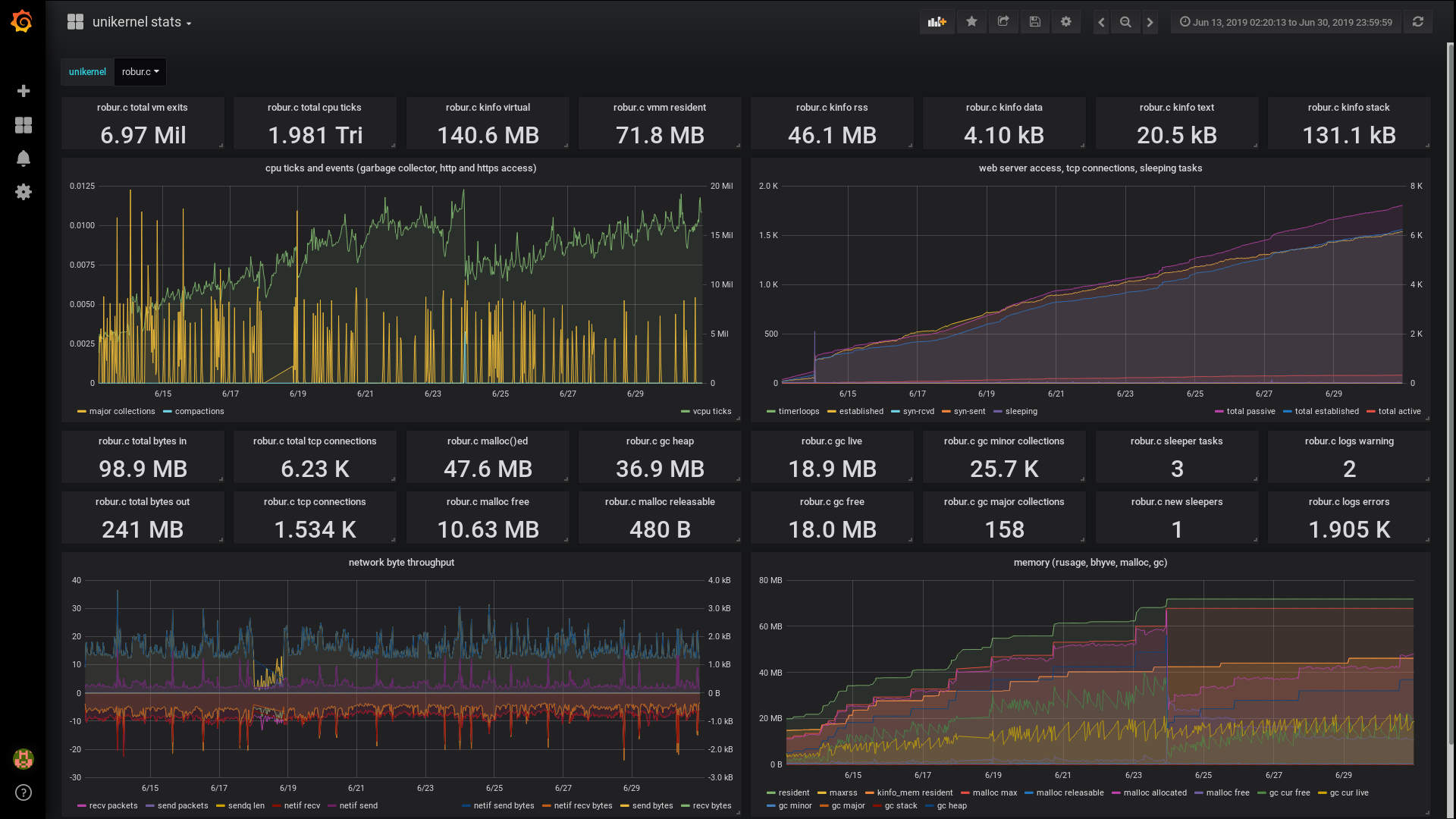

We monitored the resource usage when migrating our CalDAV server from a Unix process to a MirageOS unikernel. The unikernel size is just below 10MB. The workload consists of some clients communicating with the server on a regular basis. We use Grafana with an influx time series database to monitor virtual machines. Data is collected on the host system (rusage sysctl, kinfo_mem sysctl, ifdata sysctl, vm_get_stats BHyve statistics), and our unikernels these days emit further metrics (mostly counters: gc statistics, malloc statistics, tcp sessions, http requests and status codes).

Please note that memory usage (upper right) and vm exits (lower right) use a logarithmic scale. The CPU usage dropped by more than a factor of 4, and the memory usage by a factor of 25. The network traffic increased: previously we stored log messages on the virtual machine itself, now we send them to a dedicated log host.

A MirageOS unikernel, apart from a smaller attack surface, indeed uses fewer resources and actually emits less CO2 than the same service on a Unix virtual machine. So we're doing something good for the environment! :)

At the moment our calendar server contains 63 events. The git repository had around 500 commits in the past month: nearly all of them were made by the CalDAV server itself when a client modified data via CalDAV, plus two manual commits: the initial data imported from the file system, and one commit fixing a bug in the encoder of our icalendar library.

Our CalDAV implementation is very basic: scheduling and adding attendees (which requires sending out eMail) are not supported. But it works well for us: we have individual calendars and a shared one which everyone can write to. On the client side we use macOS and iOS iCalendar, Android DAVdroid, and Thunderbird. If you would like to try our CalDAV server, have a look at our installation instructions. Please report any issues you find, or if you struggle with the installation.

DNS

There has been more work on our DNS implementation, now here. We included a DNS client library, and some example unikernels are available. They also require our opam repository overlay. Please report issues if you run into trouble while experimenting with them.

The most prominent one is primary-git, a unikernel which acts as a primary authoritative DNS server (UDP and TCP). On startup, it fetches a remote git repository that contains zone files and shared HMAC secrets. The zones are served, and secondary servers are notified with the respective serial numbers of the zones, authenticated using TSIG with the shared secrets. The primary server provides dynamic in-protocol updates of DNS resource records (nsupdate), and after successful authentication pushes the change to the remote git. To change the zone, you can just edit the zone file and push to the git remote - with the proper pre- and post-commit hooks an authenticated notify is sent to the primary server, which then pulls the git remote.

Another noteworthy unikernel is letsencrypt, which acts as a secondary server. Whenever it observes a TLSA record with a custom type (0xFF) and a DER-encoded certificate signing request, it requests a signature from Let's Encrypt by solving the DNS challenge. The certificate is pushed to the DNS server as a TLSA record as well. The DNS implementation provides ocertify and dns-mirage-certify, which use the above mechanism to retrieve valid Let's Encrypt certificates. The caller (unikernel or Unix command-line utility) either takes a private key directly or generates one from a (provided) seed, and generates a certificate signing request. It then looks in DNS for a certificate which is still valid and matches the public key and the hostname. If such a certificate is not present, the certificate signing request is pushed to DNS (via the nsupdate protocol), authenticated using TSIG with a given secret. This way our public facing unikernels (website, this blog, TLS demo server, ...) block on startup until they have obtained a certificate via DNS - we avoid embedding the certificate into the unikernel image.
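The decision the caller takes in the lookup described above can be sketched in a few lines of OCaml. This is only a simplified model under my own assumptions, not the code of ocertify or dns-mirage-certify: the record type, the string stand-in for the public key, and the names usable and decide are all hypothetical.

```ocaml
(* Hypothetical, simplified model: not the API of ocertify or
   dns-mirage-certify. *)
type certificate = {
  hostname : string;
  public_key : string; (* stand-in for the real public key *)
  not_after : float; (* expiry, in seconds since the epoch *)
}

type action =
  | Use of certificate (* a usable certificate is already in DNS *)
  | Push_csr (* none found: upload a signing request via nsupdate *)

(* A certificate is usable if it matches our hostname and public key,
   and has not expired yet. *)
let usable ~now ~hostname ~public_key cert =
  String.equal cert.hostname hostname
  && String.equal cert.public_key public_key
  && cert.not_after > now

(* [in_dns] stands for the certificates found in DNS for the name. *)
let decide ~now ~hostname ~public_key in_dns =
  match List.find_opt (usable ~now ~hostname ~public_key) in_dns with
  | Some cert -> Use cert
  | None -> Push_csr
```

In the Push_csr case the caller would then wait and look in DNS again until the signed certificate shows up.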

Monitoring

We like to gather statistics about the resource usage of our unikernels to find potential bottlenecks and observe memory leaks ;) The base for the setup is the metrics library, which is similar in design to the logs library: libraries use the core to gather metrics. A separate aspect is the reporter, which is globally registered and responsible for exfiltrating the data via its favourite protocol. If no reporter is registered, the overhead is negligible.
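The split between gathering and reporting can be illustrated with a small sketch that uses only the OCaml standard library. It is not the API of the metrics library: the names reporter, set_reporter, report and memory_reporter are made up for this example, and the fields are restricted to integer counters to keep it short.

```ocaml
(* Illustrative only: this is NOT the API of the metrics library. *)

(* The globally registered reporter; [None] means nobody is listening. *)
let reporter : (string -> (string * int) list -> unit) option ref = ref None

let set_reporter f = reporter := Some f

(* Library side: the data is passed as a thunk, so it is only computed
   when a reporter is registered. *)
let report ~src data =
  match !reporter with
  | None -> ()
  | Some f -> f src (data ())

(* Application side: a reporter that collects lines in memory. A real
   reporter would send them over the network instead. *)
let collected : string list ref = ref []

let memory_reporter src fields =
  let field (k, v) = Printf.sprintf "%s=%d" k v in
  let line = src ^ " " ^ String.concat "," (List.map field fields) in
  collected := line :: !collected
```

A library would call something like report ~src:"http" (fun () -> [ "requests", 3 ]), and the application decides at startup whether, and how, to call set_reporter. Without a registered reporter, the only cost is the match on the reference.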

This is a dashboard which combines both statistics gathered from the host system and various metrics from the MirageOS unikernel. The monitoring branch of our opam repository overlay is used together with monitoring-experiments. The logs errors counter (middle right) was caused by the icalendar parser, which tried to parse its own badly emitted ics (the bug is now fixed, the dashboard is from last month).

OCaml libraries

The domain-name library was developed to handle RFC 1035 domain names and host names. It initially was part of the DNS code, but is now freestanding to be used in other core libraries (such as ipaddr) with a small dependency footprint.
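To give an idea of the difference between a domain name and a host name, here is a simplified check using only the OCaml standard library. It is not the implementation of the domain-name library, and the function names are my own. It assumes the text form without a trailing dot, and the usual rules: labels of 1 to 63 bytes, at most 253 bytes in total, and for host names only letters, digits and hyphens, with no hyphen at the start or end of a label.

```ocaml
(* A simplified check, not the implementation of the domain-name library. *)

let is_host_char = function
  | 'a' .. 'z' | 'A' .. 'Z' | '0' .. '9' | '-' -> true
  | _ -> false

(* True if [p] holds for every character of [s]. *)
let all_chars p s =
  let rec go i = i = String.length s || (p s.[i] && go (i + 1)) in
  go 0

(* Each label is between 1 and 63 bytes long. *)
let valid_label l =
  let len = String.length l in
  len >= 1 && len <= 63

(* Host name labels use only letters, digits and hyphens, and neither
   start nor end with a hyphen. *)
let valid_host_label l =
  valid_label l
  && all_chars is_host_char l
  && l.[0] <> '-'
  && l.[String.length l - 1] <> '-'

(* Text form without trailing dot: at most 253 bytes, which corresponds
   to the 255 byte limit of the wire format. *)
let check label_ok name =
  String.length name <= 253
  && List.for_all label_ok (String.split_on_char '.' name)

let is_domain_name = check valid_label
let is_host_name = check valid_host_label
```

With these definitions, a service name such as _443._tcp.robur.io is accepted by is_domain_name but rejected by is_host_name, because of the underscores.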

The GADT map is a normal OCaml Map structure, but takes key-dependent value types by using a GADT. This library was also part of DNS, but is more broadly useful: we already use it in our icalendar library (icalendar is the data format for calendar entries in CalDAV), and in our OpenVPN configuration parser. It is also used in x509, which got reworked quite a bit recently (release pending) and has preliminary PKCS12 support (which deserves its own article). TLS 1.3 is available on a branch, but is not yet merged. More work is underway, hopefully with sufficient time to write more articles about it.
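What "key-dependent value types" means for the GADT map is easiest to see in code. The following is a minimal sketch of the idea with made-up keys, not the API of the library: for brevity it stores the bindings in a list, whereas a real implementation would use a balanced tree like OCaml's Map.

```ocaml
(* A minimal sketch of the idea, not the API of the GADT map library. *)

(* The type parameter of each key fixes the type of the value stored
   under it. These keys are hypothetical. *)
type _ key =
  | Summary : string key
  | Priority : int key
  | Attendees : string list key

(* A binding packs a key together with a value of the matching type. *)
type binding = B : 'a key * 'a -> binding

(* Comparing two keys also reveals whether their value types agree. *)
type (_, _) eq = Refl : ('a, 'a) eq

let same : type a b. a key -> b key -> (a, b) eq option =
 fun k k' ->
  match k, k' with
  | Summary, Summary -> Some Refl
  | Priority, Priority -> Some Refl
  | Attendees, Attendees -> Some Refl
  | _ -> None

type t = binding list

let empty : t = []

(* Adding a binding replaces an existing one for the same key. *)
let add (type a) (k : a key) (v : a) (m : t) : t =
  let other (B (k', _)) = Option.is_none (same k k') in
  B (k, v) :: List.filter other m

(* The result type depends on the key: [find Priority] returns an
   [int option], [find Summary] a [string option]. *)
let rec find : type a. a key -> t -> a option =
 fun k m ->
  match m with
  | [] -> None
  | B (k', v) :: rest -> (
    match same k k' with
    | Some Refl -> Some v
    | None -> find k rest)
```

The type checker rejects add Priority "high", and the caller of find never needs to match on a variant or cast the result.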

Conclusion

More projects are happening as we speak. It takes time to upstream all the changes, such as monitoring, new core libraries, getting our DNS implementation released, pushing Conex into production, more features such as DNSSec, ...

I'm interested in feedback, either via Mastodon (hannesm@mastodon.social) or via eMail.